Pharmacovigilance Signal Detector

Analyze Social Media Safety Signals

Enter data from social media monitoring to calculate the likelihood of a valid safety signal. This tool demonstrates how pharmaceutical companies evaluate real-world patient posts.

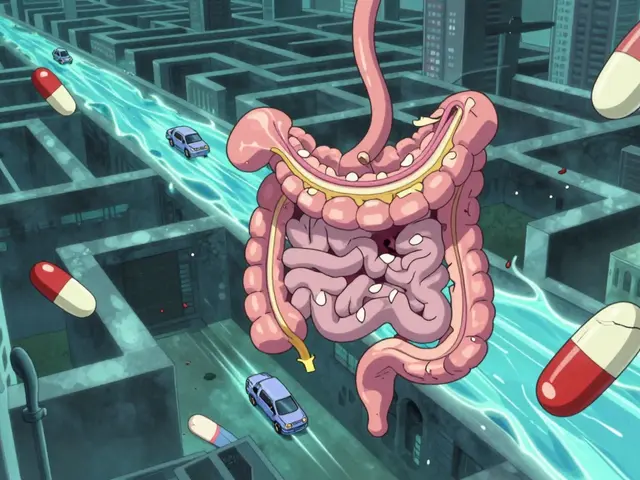

Every day, millions of people post about how their medications make them feel: good, bad, or somewhere in between. They talk about dizziness after taking a new pill, a rash that appeared weeks after starting treatment, or how their depression got worse despite their doctor’s promises. Most of these posts were never meant for regulators or drug companies. But now, they’re being watched. Social media pharmacovigilance is no longer science fiction; it’s a real, growing part of how drugs are monitored for safety.

Why Social Media Matters for Drug Safety

Traditional ways of reporting side effects, such as doctors filling out forms or patients calling hotlines, capture only 5 to 10% of actual adverse reactions. That’s not because people aren’t experiencing problems. It’s because reporting is slow, complicated, and often feels pointless. Many patients don’t know how to report, or they assume nothing will be done.

Social media changes that. People are already talking. On Reddit, Twitter, Facebook groups, and health forums, they share raw, unfiltered experiences. A woman posts about sudden heart palpitations after starting a new blood pressure medication. A man writes that his migraine medicine made him feel like he was floating. These aren’t clinical trial data. They’re real-time stories from real lives.

In 2024, over 5 billion people used social media worldwide. That’s more than half the planet, and they spend over two hours a day on these platforms. For drug safety teams, that’s a massive, untapped stream of information. One case from Venus Remedies showed how spotting a cluster of rare skin reactions on social media led to a label update 112 days sooner than traditional reporting would have allowed. That’s not just efficiency; it’s potentially life-saving.

How It Actually Works (The Tech Behind the Scenes)

It’s not as simple as scrolling through tweets. Companies use AI tools to scan millions of posts. These tools don’t just look for drug names. They use Named Entity Recognition to pull out key pieces: medication brand names, dosages, symptoms, and even slang like "my head feels like it’s spinning" or "I can’t get out of bed since I started this." Then there’s Topic Modeling, which finds patterns without being told what to look for. If dozens of users suddenly mention "tingling hands" and "brain fog" after taking a newly approved antidepressant, the system flags it, even if no one used the official medical term.

AI systems now handle about 15,000 posts per hour with 85% accuracy. That sounds impressive, but here’s the catch: 68% of the posts they flag turn out to be noise. Someone’s joking. Someone’s mixing up two drugs. Someone’s just confused. That means teams still need humans to review every potential signal, and those humans need training. Pharmacovigilance staff typically spend 87 hours learning to tell the difference between a real side effect and a random rant. They learn medical slang, cultural expressions, and how to spot fake reports.

The Big Problems: Noise, Privacy, and Bias

Let’s be honest: social media isn’t a medical record. It’s messy.

First, you can’t verify who’s posting. Is that 23-year-old really taking the drug? Did they take the right dose? Do they have kidney disease that makes them more sensitive? In 92% of social media reports, critical medical details are missing, and dosage information is wrong in 87% of cases. Since there’s no way to confirm identity, every single report is unverified.

Then there’s the noise. For drugs with fewer than 10,000 prescriptions a year, false positives hit 97%, because rare drugs just don’t get enough mentions to create a clear signal. Meanwhile, popular drugs like metformin or ibuprofen flood the system with irrelevant chatter.

Privacy is another minefield. People post about their health without realizing their words might end up in a pharmaceutical company’s safety database. There’s no consent form and no opt-out. A Reddit user named "PrivacyFirstPharmD" summed it up: "I’ve seen patients share deeply personal health details, only to have them harvested without their knowledge."

And the data isn’t fair. People without smartphones, internet access, or digital literacy (often older adults, low-income groups, or people in rural areas) are invisible in these systems. That means the safety signals we detect might reflect only the experiences of tech-savvy, urban populations. The rest? Left out.
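To see why a handful of mentions rarely adds up to a clear signal, it helps to look at the proportional reporting ratio (PRR), a standard disproportionality measure in pharmacovigilance. This is a minimal sketch, not any company’s actual method: the counts are invented, `ReportCounts`, `prr_with_ci`, and `is_candidate_signal` are hypothetical names, and the flagging rule (at least 3 cases and a lower 95% confidence bound above 1) is one common convention rather than a regulatory requirement.

```python
import math
from dataclasses import dataclass


@dataclass
class ReportCounts:
    """2x2 table of post counts (a hypothetical structure for this sketch)."""
    a: int  # posts mentioning the drug of interest AND the reaction
    b: int  # posts mentioning the drug of interest, other reactions only
    c: int  # posts mentioning other drugs AND the reaction
    d: int  # posts mentioning other drugs, other reactions only


def prr_with_ci(t: ReportCounts, z: float = 1.96) -> tuple[float, float, float]:
    """Return (PRR, lower, upper) using the usual log-scale normal approximation."""
    if t.a <= 0 or t.c <= 0:
        raise ValueError("a and c must be positive for this approximation")
    prr = (t.a / (t.a + t.b)) / (t.c / (t.c + t.d))
    se = math.sqrt(1 / t.a - 1 / (t.a + t.b) + 1 / t.c - 1 / (t.c + t.d))
    return prr, prr * math.exp(-z * se), prr * math.exp(z * se)


def is_candidate_signal(t: ReportCounts, min_cases: int = 3) -> bool:
    """Flag only if there are enough cases AND the lower CI bound exceeds 1."""
    _, lower, _ = prr_with_ci(t)
    return t.a >= min_cases and lower > 1.0


# Invented background: the reaction appears in 1% of posts about other drugs.
background = dict(c=500, d=49_500)

# Same 2% reaction rate for both drugs (PRR = 2), very different mention volumes.
popular_drug = ReportCounts(a=40, b=1_960, **background)
rare_drug = ReportCounts(a=4, b=196, **background)

for name, counts in [("popular", popular_drug), ("rare", rare_drug)]:
    prr, lo, hi = prr_with_ci(counts)
    print(f"{name}: PRR={prr:.2f}, 95% CI=({lo:.2f}, {hi:.2f}), "
          f"signal={is_candidate_signal(counts)}")
```

Both drugs show the same PRR of 2, but with only 4 matching posts the rare drug’s confidence interval is wide enough to dip below 1, so it is not flagged, while the popular drug’s interval stays above 1. The statistic alone can’t separate a real effect from chance when mentions are that sparse.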

When It Actually Works: Real Cases

Despite the flaws, social media has delivered real wins. In one case, a nurse on Reddit noticed a pattern: people taking a new antidepressant were reporting severe interactions with St. John’s Wort, a common herbal supplement. Clinical trials hadn’t flagged this, and the drug’s label didn’t mention it. But dozens of users described the same reaction: nausea, anxiety spikes, racing heart. The company investigated, and within months it updated the prescribing information to warn about the interaction.

Another example came from a diabetes drug. Social media monitoring picked up early reports of unusual fatigue and low blood sugar episodes 47 days before the first formal report reached regulators. That head start allowed the company to issue a safety alert before more people were affected.

These aren’t isolated cases. According to a 2024 survey, 43% of pharmaceutical companies have detected at least one meaningful safety signal through social media in the last two years, and 78% of companies now use some form of social media monitoring.

Regulators Are Watching Too

The FDA and EMA aren’t ignoring this. In 2022, the FDA issued formal guidance saying social media data could be used, but only if it’s properly validated. In 2024, it launched a pilot program with six big drugmakers to test new AI tools that cut false positives below 15%. The EMA now requires companies to document their social media monitoring strategies in their safety reports. That means you can’t just scan tweets and call it done. You need a plan: which platforms you monitor, how you filter data, how you validate findings, and how you protect privacy.

This isn’t about replacing traditional reporting. It’s about adding another layer. Think of it like a radar system. Traditional reports are the big blips on the screen, the ones doctors and pharmacies send in. Social media is the faint, flickering signal that might mean something’s coming. It doesn’t confirm danger, but it can tell you where to look.
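One piece of that plan is how you protect privacy. A common technique, assumed here rather than prescribed by the FDA or EMA, is pseudonymization: replace each username with a keyed hash (HMAC-SHA256 from Python’s standard library) and strip obvious identifiers from the post text before it reaches a safety database. The key, the regex patterns, and the function names below are hypothetical, and the sketch is far from complete de-identification.

```python
import hashlib
import hmac
import re

# Hypothetical key for illustration only; a real system would load it from a secrets store.
PSEUDONYM_KEY = b"example-key-do-not-use"

# Deliberately simple patterns: they will miss some identifiers and may over-match
# (for example, ISO dates such as 2024-01-15 look like phone numbers to PHONE_RE).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize_handle(handle: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map a username to a stable keyed hash so repeat posts can be linked
    without storing the handle itself."""
    normalized = handle.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256).hexdigest()
    return "user_" + digest[:16]


def scrub_text(text: str) -> str:
    """Replace e-mail addresses and phone-number-like strings with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare_record(handle: str, post_text: str) -> dict[str, str]:
    """Build the record that enters the safety database: no raw handle, scrubbed text."""
    return {"author": pseudonymize_handle(handle), "text": scrub_text(post_text)}


record = prepare_record(
    "Example_User",
    "Rash since starting the new pill. DM me at 555-123-4567 or example.user@example.com",
)
print(record)
```

A keyed hash matters here because usernames are guessable: with a plain SHA-256, anyone could hash a list of known handles and match them against the database, whereas without the key that lookup fails. The same handle always maps to the same pseudonym, so reviewers can still tell when one person posts repeatedly.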

The Future: AI, Integration, and Ethics

The future of pharmacovigilance isn’t social media alone. It’s social media integrated with traditional systems. Imagine a dashboard where a doctor’s report, a pharmacy’s data, and a Reddit thread all show up together. AI matches them, flags duplicates, and highlights patterns no human would catch.

AI will get better. It’ll learn regional slang, understand context, and get smarter at spotting fake reports. But it won’t replace judgment. Humans will still need to decide: Is this a real signal? Is it urgent? Should we act?

Ethics will be the biggest challenge. Can we ethically use public posts without consent? Should patients be told their posts might be monitored? Should there be a way to opt out? These aren’t just legal questions; they’re moral ones. Dr. Sarah Peterson from Pfizer put it well: "Social media gives us a voice we’ve never had before. But we have to earn the right to listen. That means being transparent, responsible, and fair."

What This Means for Patients

You don’t need to be a scientist to understand this. If you’re taking a new drug, your experience matters, even if you just tweet about it. Your post might help someone else avoid a bad reaction, or help a company fix a label before more people get hurt.

But be careful. Don’t share personal details like your full name, address, or medical record numbers. Use a pseudonym. Know that once it’s online, it’s out there. And if you’re worried about your medication, talk to your doctor; don’t rely on social media alone. But do know this: your voice, even in a casual post, is becoming part of the safety net.

Bottom Line

Social media pharmacovigilance isn’t perfect. It’s noisy, biased, and ethically tricky. But it’s also powerful. It gives patients a real voice in drug safety, catches problems faster, and fills gaps that traditional systems miss. The key isn’t to replace old methods. It’s to use social media as a tool: carefully, responsibly, and with clear rules. Companies that do it right will build trust. Regulators who set smart guidelines will protect people. And patients? They’ll finally know their experiences aren’t being ignored.

The future of drug safety isn’t just in labs and clinical trials. It’s in the posts, tweets, and comments people make every day. The question isn’t whether we should listen. It’s how we listen well.